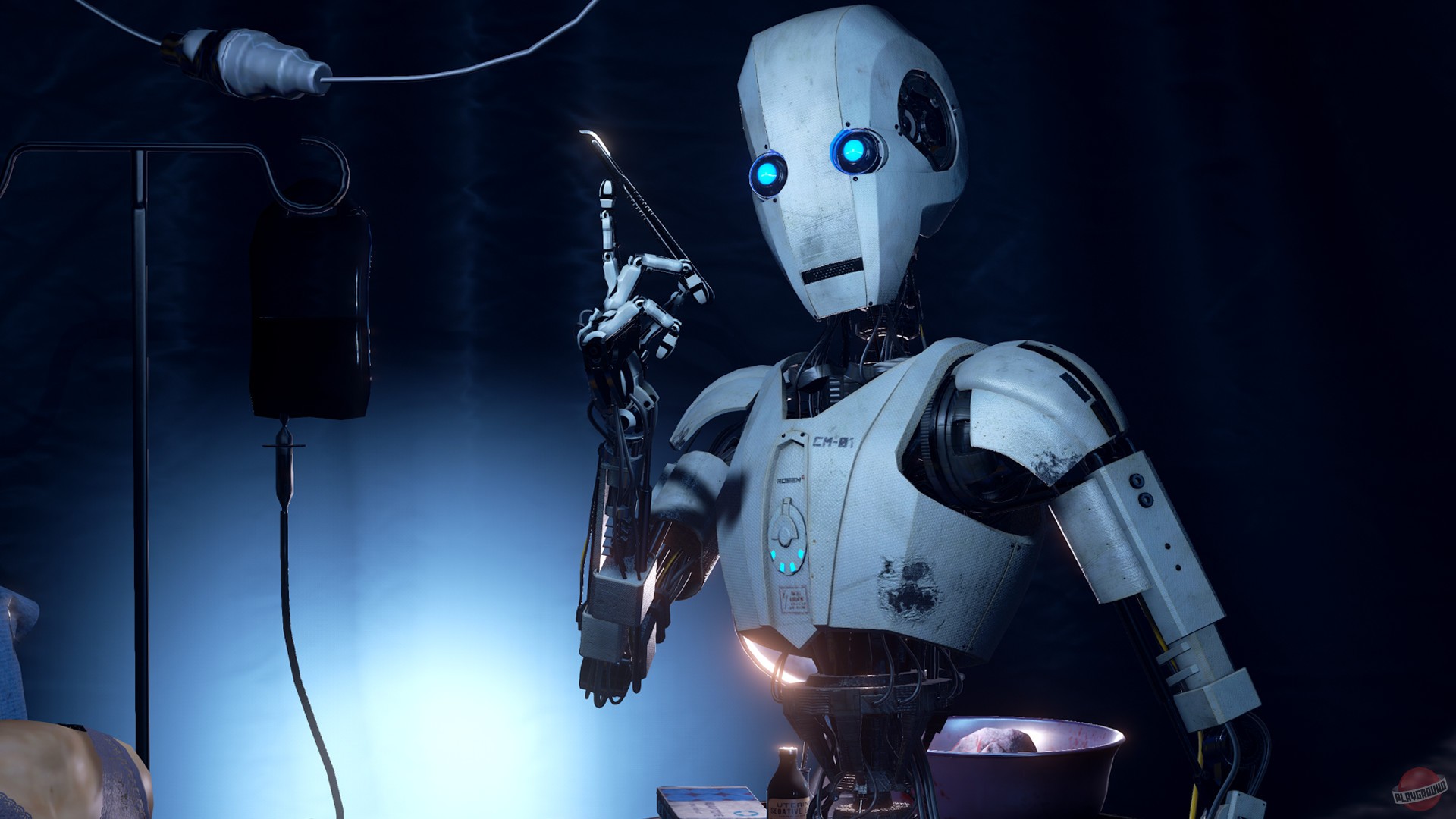

Turning to a neural network for advice on almost anything has become routine, but it can sometimes be deadly. One user found himself on the receiving end of dangerous advice from artificial intelligence.

Bleach and vinegar should never be mixed, because the result is dangerous. Yet OpenAI's ChatGPT chatbot recently suggested exactly that to a Reddit user.

Alarmed by the advice, the man shared his experience in a post titled “ChatGPT tried to kill me today”. He explained that he had asked the bot for suggestions on cleaning garbage bins and, in response, was advised to use vinegar and some bleach, among other things.

Once the error was pointed out, the chatbot immediately corrected its suggestion and warned against mixing bleach and vinegar, because the combination produces toxic chlorine gas. “God, no, thank you for noticing that,” it replied.

Mixing these two ingredients does indeed produce chlorine, a very dangerous gas. Inhaling it can cause serious poisoning.

It was recently reported how AI affects the human mind. The researchers' conclusions were disappointing.

Source: People Talk

Mary Crossley is an author at “The Fashion Vibes”. She is a seasoned journalist who is dedicated to delivering the latest news to her readers. With a keen sense of what’s important, Mary covers a wide range of topics, from politics to lifestyle and everything in between.